Welcome to the weekly fraud digest from innerworks, a London-based cybersecurity company specialising in state-of-the-art bot detection, authentication and device fingerprinting solutions.

👨🏾💻 Biometric solutions no longer working to fight fraud in Africa:

Although biometric solutions like FaceID brought fraud in Africa down by 25% last year, the rise of AI is quickly challenging their ability to secure the continent's population, with increases seen in assisted account takeovers, identity farming, and AI-generated forgeries. Read more here!

💵 Finance industry fraud losses in the U.S. estimated to reach $40 billion by 2027:

The Wall Street Journal reports on the impact that GenAI is set to have on fraud losses, specifically in financial services, from account takeover to fake account creation. The Financial Industry Regulatory Authority, the industry watchdog, is focussed on this as a key emerging threat. Read more here!

🤯 DeepSeek AI enters the market and is accused of fraud in the same week:

DeepSeek AI made headlines with its rapid market entry and advanced AI model, but was accused of fraud for allegedly copying technology from OpenAI and Microsoft. This highlights rising tensions in global AI competition. Read more here!

OpenAI and Microsoft accuse DeepSeek AI of fraud.

DeepSeek AI dominated the headlines this week, with industry giants like OpenAI and Microsoft, and even the Trump administration, claiming that DeepSeek trained its AI on stolen data. The debate centres on a technique called "distillation", in which responses from existing AI models are re-used to train new models.

This training approach is being debated across the industry. It highlights the grey areas of AI intellectual property (IP) and is heightening tensions in the US-China tech relationship, coming soon after the US banned TikTok earlier this month. OpenAI's Sam Altman previously said the company spent more than $100 million to train GPT-4, whilst DeepSeek says it spent less than $6 million. This, among other signs, is leading people to accuse DeepSeek of ‘IP theft.’

DeepSeek AI is an open-source, seemingly cheaper rival to OpenAI that appeared to enter the market overnight, but it certainly hasn’t been smooth sailing. Experts are warning people against using the service due to concerns about where the data is stored and how the Chinese government might use it. DeepSeek AI is also facing cyberattacks of its own, stating that there have been large-scale malicious attacks on its software.

Large Banks are Investing in AI Solutions to Fight Fraud.

Large banks are making it clear, through their acquisitions in the space, that they see AI as the way to fight fraud. Following its acquisition of anti-fraud company Featurespace, which closed at the end of last year, Visa is continuing to invest heavily in the space. In the past decade, it has allocated $3 billion toward AI and data infrastructure.

Visa isn’t the only financial institution committing to AI & anti-fraud solutions:

Late last year, Mastercard announced its plan to acquire Recorded Future for $2.65 billion. The cybersecurity firm provides ‘intelligence signals’ by analysing a broad set of data sources, giving its customers insights they can act on to mitigate risks.

Deutsche Bank announced a multi-year innovation partnership with NVIDIA to accelerate the use of artificial intelligence (AI) and machine learning (ML) in the banking sector, specifically for intelligent avatars, speech AI, and fraud detection.

“AI, ML and data will be a game changer in banking, and our partnership with NVIDIA is further evidence that we are committed to redefining what is possible for our clients,” Christian Sewing, CEO, Deutsche Bank.

The investment in AI-led anti-fraud solutions is focused not only on detection but also on real-time customer service support during fraud attacks. Companies that can improve response speed and deploy sophisticated AI agents to resolve customer queries effectively are likely to come out on top.

💬 90% of enterprises researching AI but slow to integrate it into TRiSM (Trust, Risk and Security Management):

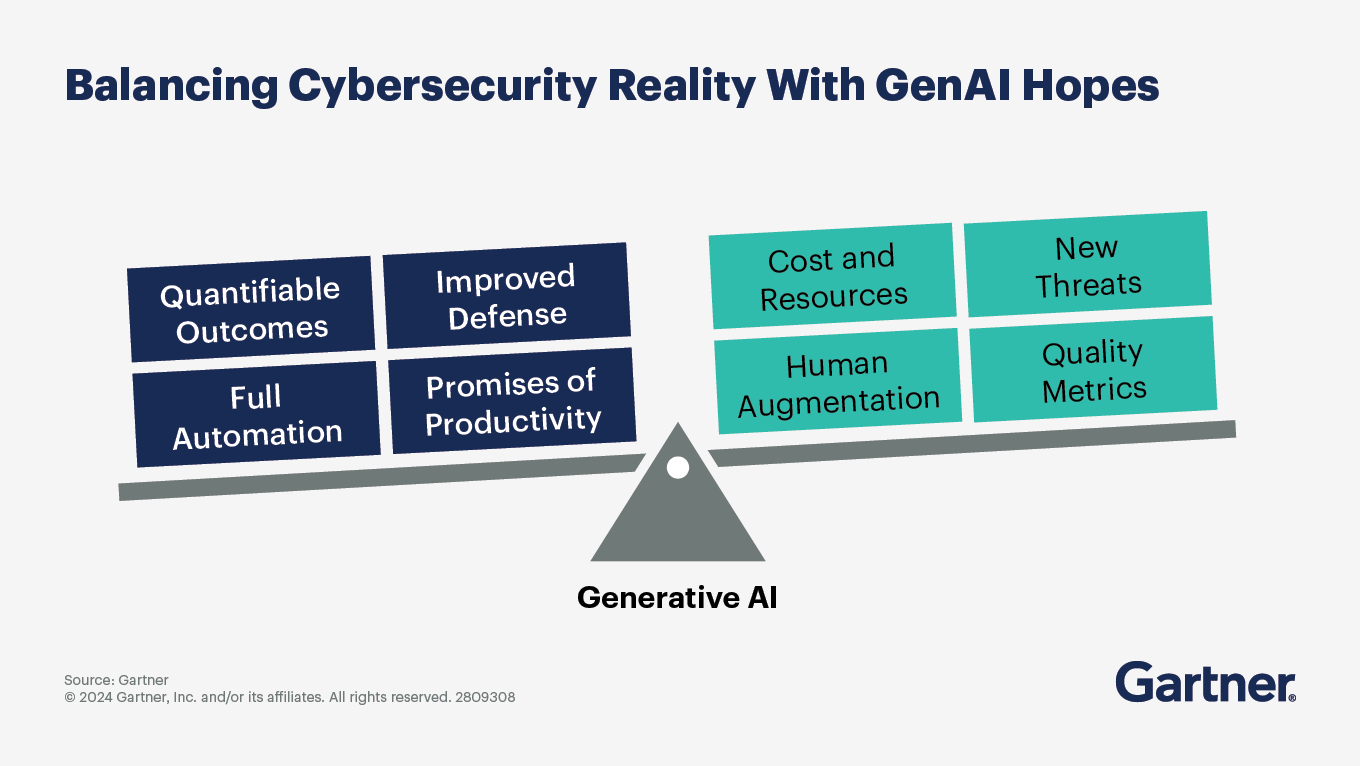

Gartner has released an advisory report on how security professionals can best harness GenAI. Specifically, it predicts: “Through 2025, GenAI will cause a spike of cybersecurity resources required to secure it, causing more than a 15% incremental spend on application and data security.” Gartner makes clear the importance of GenAI governance alongside this rapid adoption.

The report states that 90% of enterprises are researching or piloting AI but need to act fast to reduce risk and integrate AI capabilities into risk and security management. The image above, taken from the report, highlights the current hype surrounding GenAI and the hopes for the big changes that will come with it. It illustrates the balance companies should consider when implementing GenAI.

🤔 Fraud debate question of the week:

What does the recent DeepSeek AI case teach us about AI IP?